
He was among the first to advocate for the shift from "Made in China" to "Created in China," making pioneering contributions to China's transformation from a manufacturing powerhouse into an innovative, creative, and intellectually driven nation.

In 2011, he was an active advocate and promoter of "Mass Entrepreneurship & Innovation" in China.

Upon returning to China, he proposed that the central government of China emulate Japan's "one yen" company registration system to lower the threshold for entrepreneurship, encourage mass innovation and entrepreneurship, and adopt the "Industry", "Academia", and "Research" collaboration model of developed capitalist countries, using incubators as vehicles for turning technological inventions into products.

Three years later, the central government of China launched the "Mass Entrepreneurship and Innovation" initiative. Subsequently, innovation spaces and incubators began to learn from and draw on the experience and innovation model of the "Creation Commune" 「创造公社」, igniting the 2014 wave of "Mass Entrepreneurship and Innovation" 「大众创业,万众创新」.

■Research Areas (2006)

Cloud Computing, Big Data Science & Artificial Intelligence (AI)

Ubiquitous Computing

Virtual Reality Technology

OpenSocial API (e.g., Social Networking Services)

Abstract

"Cloud Computing" has increasingly become a term that people pay attention to, because it represents the future of the information age. So, what is Cloud Computing? What is it used for? How will it change the world? Many questions are waiting for answers. Although many of them may not yet have complete answers, this article analyzes the origin of Cloud Computing and its relationship with grid computing, Web 2.0, and ubiquitous computing in order to illustrate the history, development, and future of Cloud Computing.

The first scholarly use of the term "Cloud Computing" was in a 1997 lecture by Ramnath Chellappa. In 1983, Sun Microsystems coined the slogan "The Network is the Computer." Around 2006, the term "Cloud Computing" began to appear occasionally in media and magazines. In March 2006, Amazon released its Simple Storage Service (S3), followed in August by its Elastic Compute Cloud (EC2) service.

On August 9, 2006, Google Chief Executive Officer Eric Schmidt first proposed the concept of Cloud Computing at the Search Engine Strategies conference (SES San Jose 2006).

By the end of 2007, the term was appearing with rapidly increasing frequency. Google and IBM promoted a Cloud Computing project on U.S. college campuses, including Carnegie Mellon University, MIT, Stanford University, the University of California at Berkeley, and the University of Maryland. IBM and Google each contributed $20 million to $25 million, providing approximately 400 advanced computers, with plans to eventually deploy more than 4,000. These computers serve six universities, including the University of Washington in Seattle, which took the lead in research and development programming. The project aims to reduce the cost of academic research into distributed computing technology and to provide these universities with the relevant software, hardware, and technical support (including hundreds of personal computers and BladeCenter and System x servers; these computing platforms provide 1,600 processors and support Linux, Xen, Hadoop, and other open-source platforms). Students can use them to develop web-based, large-scale computing research projects.

In early 2008, the Chinese term for Cloud Computing began to be rendered as "云计算" or "曇端計算".

(1) Origins of Cloud Computing: the Amazon EC2 product

In fact, Amazon's EC2 product launched earlier than the IBM and Google efforts. Although Amazon EC2 is a heavyweight product in the Cloud Computing market, Amazon's own influence in the world was very limited in 2006 and 2007, so the EC2 cloud product did less to popularize the concept than the IBM-Google project did. This does not diminish EC2's status as a pioneering Cloud Computing product. Although Amazon contributed little to popularizing the concept in its early development, as Cloud Computing matured, Amazon's strength and technical level in the field were higher than those of IBM and Google from the very beginning.

In August 2006, Amazon released the beta version of EC2, having previously launched another important product, S3. EC2 is an abbreviation of Elastic Compute Cloud; S3 stands for Simple Storage Service. At the time of its release, EC2 was referred to as a "Simple Compute Service"; the word "Cloud" was introduced not as a category name but as part of a product name, representing an alternative service concept.

Of course, calling Elastic Compute Cloud "Cloud Computing" at that time would clearly have been inappropriate. Among the original reports on the EC2 launch, one was titled "Amazon Cloud Computing goes Beta", but most others described the product with terms such as Utility, Elastic, and Virtualized.

In October 2007, as IBM and Google began describing some of their own projects as Cloud Computing, the term began to spread rapidly (within the limited world of IT). At that time, IBM's and Google's parallel computing projects were still aimed at research and scientific purposes, and customers found that EC2 was the one genuinely commercial Cloud Computing product. Because Amazon's AWS offerings include many Cloud Computing services, this established Amazon's position as the Cloud Computing market leader.

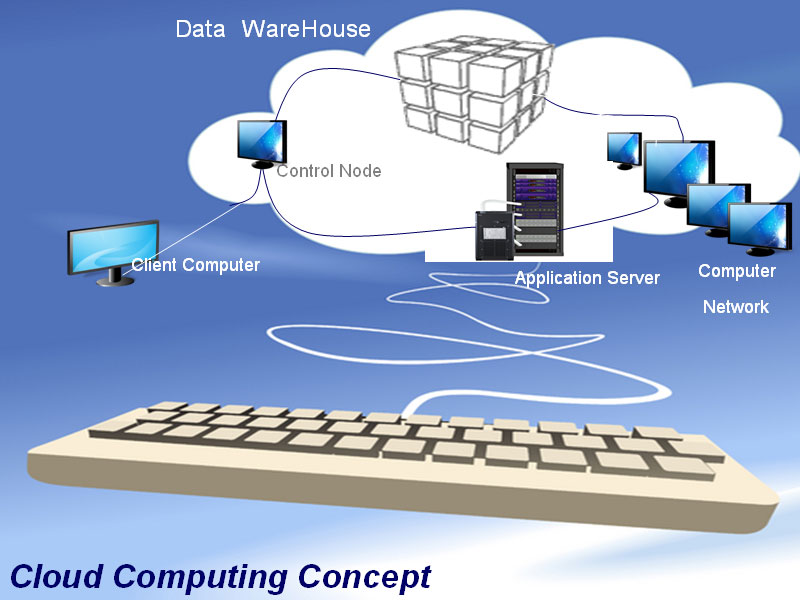

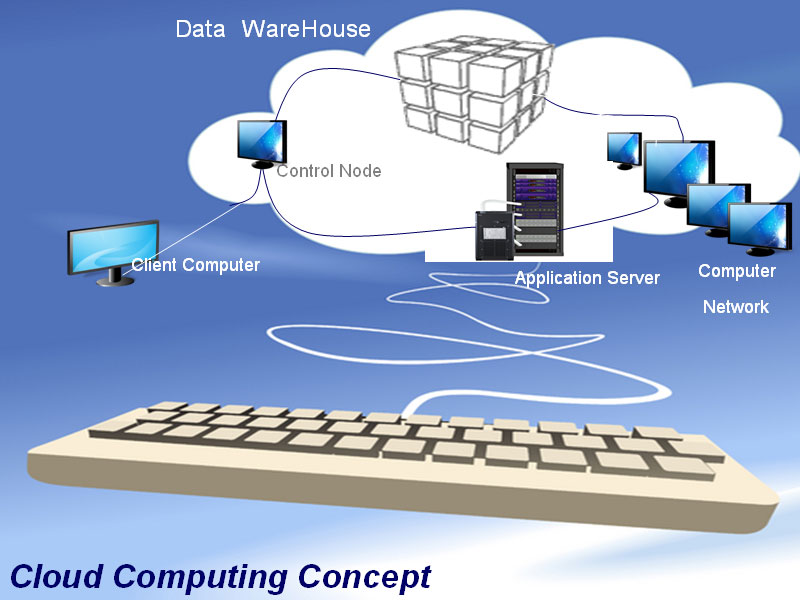

(2) Definition of Cloud Computing

Cloud Computing is a very attractive web application model, and the concept has overturned traditional ideas about the Internet world. In a narrow sense, Cloud Computing refers to the delivery and usage model of IT infrastructure, in which resources are obtained on demand over the network in a scalable manner. In a broad sense, Cloud Computing refers to the delivery and usage model of services, in which the required services are obtained on demand over the network in an easily scalable way. These services can be IT and software services, Internet-related services, or any other services. Cloud Computing is characterized by very large scale, virtualization, security, and reliability.
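To make the narrow definition concrete, here is a minimal sketch of elastic, on-demand provisioning: each hour the service runs just enough instances to cover the current load instead of a fixed fleet sized for the peak. The function names, the capacity of 100 requests per second per instance, and the load figures are hypothetical illustrations, not any provider's API.

```python
import math

def instances_needed(requests_per_sec: float, capacity_per_instance: float) -> int:
    """Smallest number of instances whose combined capacity covers the load."""
    if requests_per_sec <= 0:
        return 0
    return math.ceil(requests_per_sec / capacity_per_instance)

def provision(load_by_hour, capacity_per_instance=100.0):
    """Instance count an elastic service would run in each hour (hypothetical model)."""
    return [instances_needed(load, capacity_per_instance) for load in load_by_hour]

# Hypothetical hourly load in requests per second, with a midday peak.
load = [50, 40, 120, 480, 950, 300, 80]
plan = provision(load)
print(plan)                              # [1, 1, 2, 5, 10, 3, 1]
print(sum(plan), max(plan) * len(plan))  # 23 70
```

In this toy run, scaling with demand uses 23 instance-hours, compared with 70 for a fleet permanently sized for the 10-instance peak.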

(3) Timeline of Cloud Computing History

1983 Sun Microsystems, Inc. founded

1994 Amazon.com established

1995 Yahoo! Inc. founded

1998 Google Search founded

1999 Salesforce.com founded

2001 IBM Grid Computing Initiative announced

2005 Tim O'Reilly's article "What Is Web 2.0?" published

2006 Amazon S3 launched

Google CEO Eric Schmidt introduces the keyword "Cloud"

Twitter launched

Amazon EC2 launched

2007 Microsoft SkyDrive launched

Google, IBM, and a number of universities embark on a large-scale Cloud Computing research project

2008 Microsoft Windows Azure (Ray Ozzie, Chief Software Architect of Microsoft)

Google App Engine

2009 Oracle's acquisition of Sun Microsystems

Xerox's acquisition of Affiliated Computer Services

2010 VMforce launched (Salesforce.com and VMware jointly launched VMforce services)

Oracle Exalogic Elastic Cloud

2. The Advantages of Cloud Computing

(1) Lower cost

This is a quantifiable financial advantage: you do not need a high-performance (and correspondingly high-priced) personal computer to run web-based cloud applications. Because the applications run in the cloud rather than on your personal computer, you do not need to install the software that a traditional personal computer requires (for example, on Microsoft Windows or Unix systems), nor do you need much hard disk space for storage. Therefore, a Cloud Computing client computer can be cheap, with a smaller hard drive, less memory, and a more efficient processor. In fact, in this case the client computer doesn't even need a CD or DVD drive, because it doesn't load software programs or save any documents locally.

(2) Higher Performance

With Cloud Computing, you don't need to store and run PC-based software applications, and no cumbersome programs take up computer memory, so users get better performance from their computers. In short, a Cloud Computing client starts up and runs faster than a conventional personal computer, because it loads only small programs and processes into memory.

(3) Lower IT infrastructure costs

In larger organizations, IT departments can reduce costs by using the Cloud Computing model. Instead of investing in more powerful servers, IT staff can use Cloud Computing capacity to supplement or replace internal computing resources. Companies with peak traffic needs no longer have to buy equipment to handle the peak (equipment that sits idle the rest of the time); by using computers and servers in the cloud, they can meet peak computing demand easily.
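The peak-traffic argument is essentially arithmetic, so a small sketch may help. It compares owning enough servers for the peak hour, which cost money every hour whether busy or idle, with renting only the server-hours each hour needs. The prices (5 cents per owned server-hour, amortized, and 10 cents per rented server-hour), the demand profile, and the function names are hypothetical; costs are kept in integer cents to avoid rounding noise.

```python
def owned_cost_cents(demand, owned_cents_per_server_hour):
    # Capacity must cover the peak hour, and owned servers cost money even when idle.
    return max(demand) * owned_cents_per_server_hour * len(demand)

def rented_cost_cents(demand, rental_cents_per_server_hour):
    # Pay only for the server-hours actually used.
    return sum(demand) * rental_cents_per_server_hour

def utilization(demand):
    # Average fraction of the owned peak capacity that is actually busy.
    return sum(demand) / (max(demand) * len(demand))

# Hypothetical day: 2 servers needed most hours, 20 during a 3-hour peak.
demand = [2] * 20 + [20, 20, 20] + [2]

print(owned_cost_cents(demand, 5))    # 2400
print(rented_cost_cents(demand, 10))  # 1020
print(utilization(demand))            # 0.2125
```

Even at twice the hourly price, renting wins in this example because the owned fleet would be busy only about 21% of the time. In general, under this simple model renting is cheaper whenever average utilization is below the ratio of the owned rate to the rental rate (0.5 here).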

(4) Less maintenance or management

From the viewpoint of maintenance, Cloud Computing greatly reduces hardware and software maintenance costs for companies of any size. First, hardware: because the organization needs less hardware (fewer servers), maintenance costs fall immediately. Second, software: because cloud-based applications run elsewhere, the organization's computers hold little software, so fewer IT engineers or staff are needed to maintain it.

(5) Lower software costs

The most attractive advantage of Cloud Computing is lower software cost. There is no need to buy a software package for every computer in the organization; only the employees who actually use an application need to access it in the cloud. Even when web-based applications resemble desktop software, they cost much less than buying the software outright (for example, Microsoft software). IT staff also save the cost of installing and maintaining these programs on each desktop.

In fact, many companies now offer such applications at very low cost or even for free; some (such as Google) provide their web-based applications free of charge. This is more attractive to individuals and large enterprises than paying high prices to buy Microsoft software or similar desktop software and applications from vendors.

(6) Real-time software updates

Another software-related advantage of Cloud Computing is that users no longer have to worry about frequent software upgrades and their high costs. For web-based applications, updates happen automatically and take effect the next time users log on to the cloud. Moreover, whenever you visit a fee-based web application, you always use the latest version, without paying upgrade fees or downloading updates.

(7) Enhanced computing power

This is obvious. When you connect to a Cloud Computing system, you can draw on the power of the entire cloud. You are no longer limited to what a single computer can do: using the capacity of thousands of computers and servers, you can perform supercomputer-like tasks. In other words, in the cloud you can attempt bigger tasks than on a traditional desktop.
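Splitting one large job across many machines is what frameworks such as Hadoop (listed earlier among the IBM-Google project's platforms) do with the MapReduce model. The sketch below is a single-machine stand-in under a stated assumption: a local thread pool plays the role of the cloud's servers. The input is split into chunks, each chunk is counted independently (map), and the partial counts are merged (reduce). The function names are illustrative, not Hadoop's API.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def map_chunk(lines):
    """Map step: count words in one chunk (one 'server's' share of the input)."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

def split(items, n_chunks):
    """Split the input into roughly equal chunks, one per worker."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]

def word_count(lines, workers=4):
    chunks = split(lines, workers)
    # The thread pool is only a local stand-in for a cluster of cloud servers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_chunk, chunks))
    # Reduce step: merge the partial counts into one result.
    total = Counter()
    for part in partials:
        total.update(part)
    return total

docs = ["the cloud", "the network is the computer", "cloud computing", "the cloud grows"]
result = word_count(docs, workers=2)
print(result["the"], result["cloud"])  # 4 3
```

Because each chunk is counted without looking at any other chunk, adding more workers (or, in a real cluster, more machines) lets the map step proceed in parallel; only the small partial results need to be combined.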

(8) Virtually unlimited storage capacity

Cloud Computing provides almost unlimited storage capacity. Imagine your desktop or notebook computer is about to run out of storage space. Compared with the cloud's capacity of hundreds of petabytes (100 PB alone is 100 million gigabytes), your computer's 200 GB hard drive is insignificant. Whatever you need to save, you have enough space.
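The storage comparison can be checked with decimal (SI) units, where 1 PB = 1,000,000 GB. This short sketch does that conversion and compares the result with the 200 GB drive from the text; the variable names are illustrative.

```python
GB_PER_PB = 10**6  # decimal (SI) units: 1 PB = 1,000 TB = 1,000,000 GB

cloud_gb = 100 * GB_PER_PB  # 100 PB of cloud storage
laptop_gb = 200             # the 200 GB hard drive mentioned in the text

print(f"{cloud_gb:,} GB")     # 100,000,000 GB
print(cloud_gb // laptop_gb)  # 500000
```

With binary units (1 PiB = 2**20 GiB) the numbers differ slightly, but the order of magnitude is the same: 100 PB is about half a million 200 GB drives.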

(9) Enhanced data security

Where is all your data stored? Somewhere in the cloud. This is different from the traditional desktop environment. In the past, when your hard drive crashed in a desktop environment, it could destroy all your valuable data.

In the cloud, by contrast, a computer crash does not affect your stored data. Because cloud data is automatically replicated, a single failure does not cause data loss. This also means that even if your PC crashes, all your data is still stored in the cloud, and you can still access it. In a world where few desktop users back up their data regularly, Cloud Computing can help guarantee the security of data.
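The claim that automatic copying protects data from a single crash can be illustrated with a toy model. The class below is a hypothetical in-memory sketch, not any real cloud storage API: every write goes to several replicas, and a read succeeds as long as at least one replica that holds the value survives.

```python
class ReplicatedStore:
    """Toy key-value store that writes every value to several replicas.
    Hypothetical illustration only, not a real cloud storage API."""

    def __init__(self, n_replicas=3):
        self.replicas = [dict() for _ in range(n_replicas)]
        self.alive = [True] * n_replicas

    def put(self, key, value):
        # Write the same value to every live replica.
        for i, replica in enumerate(self.replicas):
            if self.alive[i]:
                replica[key] = value

    def crash(self, i):
        # Simulate losing one machine together with its disk contents.
        self.alive[i] = False
        self.replicas[i].clear()

    def get(self, key):
        # Read from the first live replica that still holds the key.
        for i, replica in enumerate(self.replicas):
            if self.alive[i] and key in replica:
                return replica[key]
        raise KeyError(key)

store = ReplicatedStore()
store.put("thesis.doc", "draft v3")
store.crash(0)
print(store.get("thesis.doc"))  # draft v3
```

The model also shows the limit of the idea: if every replica fails, the data is gone, which is why real storage services generally spread copies across separate machines and locations.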

(10) Improved compatibility between operating systems

Have you ever tried to get a Mac to communicate with a Windows system, or to make a Linux machine share data with a Windows computer? You probably found the process frustrating. With Cloud Computing this need not be so, because in the cloud the operating system does not matter. You can connect your Windows computer to the cloud and share files with computers running Apple Mac OS, Linux, or UNIX. In the cloud, what matters is the data, not the operating system.

(11) Improved file format compatibility

With Cloud Computing, you need not worry about whether your machine can open documents created by other users' applications or operating systems (for example, a Word 2007 document cannot be opened directly in Word 2003). Web-based cloud applications can open files created by all users in all formats. When everyone shares data and applications in the cloud, the problem of incompatible formats no longer exists.

(12) Easier group collaboration

Letting many users work together, so that multiple users can successfully collaborate on common projects online, is one of the most important advantages of Cloud Computing today. Just imagine an important development project between China and Japan that requires immediate, face-to-face communication to solve development problems. Before Cloud Computing appeared, you had to email the relevant documents from one member to another.

Today, with Cloud Computing, that is unnecessary. You can access each other's project documentation in real time, and one user's edits are automatically reflected on other users' screens. This is entirely possible because the documentation lives in the cloud rather than on a personal computer. All you need is a computer with an Internet connection, and then you will be able to collaborate.
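The key point in the paragraph above is that there is one authoritative copy of the document in the cloud, so an edit by any user can be pushed to everyone else's screen. The class below is a hypothetical, in-memory sketch of that idea; real collaborative editors also handle networking, concurrent edits, and merging, none of which is modeled here.

```python
class SharedDocument:
    """Toy model of a document kept on a server rather than on each PC.
    Hypothetical illustration; no networking or conflict resolution."""

    def __init__(self, text=""):
        self.text = text
        self.last_editor = None
        self.screens = {}  # user name -> what that user's screen currently shows

    def join(self, user):
        # A new collaborator immediately sees the current server copy.
        self.screens[user] = self.text

    def edit(self, user, new_text):
        # Update the single authoritative copy, then push it to every connected screen.
        self.text = new_text
        self.last_editor = user
        for name in self.screens:
            self.screens[name] = self.text

doc = SharedDocument("Spec v1")
doc.join("Beijing")
doc.join("Tokyo")
doc.edit("Tokyo", "Spec v2: fix login bug")
print(doc.screens["Beijing"])  # Spec v2: fix login bug
```

Contrast this with emailing files: there, each member holds a separate copy, and nothing keeps the copies in sync.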

Of course, group collaboration mostly means completing larger projects faster. It also enables group projects across different geographic locations: groups no longer need to work in the same office to achieve good results. With Cloud Computing, anyone at any level (regardless of identity and status) can collaborate in real time.

(13) Universal access to documents

For example, while on holiday at home, you suddenly realize you left important files at school; or you arrive at the school's computer center and find you forgot important data. That's no problem: with Cloud Computing, you do not have to carry documents. As long as you have a computer and an Internet connection, you can access them from anywhere, and no matter where you are, all of your documents are ready for use. It is as simple as that.

(14) Eliminating reliance on specific equipment

Finally, here is the ultimate advantage of Cloud Computing: you are no longer dependent on a single computer or network. If you replace your computer, your existing applications and documents follow you through the cloud. If you switch to a portable device, your applications and documents are still available. There is no need to buy special equipment for a specific version of a program, or to save your documents in a device-specific format. No matter what kind of computer you use, your documents and the programs that create them are the same.

6. The Future of Cloud Computing

“640K ought to be enough for anybody.”

(Source: http://en.wikiquote.org/wiki/Bill_Gates)

Bill Gates, 1989, talking about "computer science in the past, present and future." As we all know, programs were very small in the late 1980s, and a 100 MB hard disk was hard to fill. At that time, the Internet had begun to develop, but it was not yet widely available or popular around the world. Meanwhile, the era of digital cameras, digital music, and 3D movies had hardly begun, so the storage requirements of that time cannot be compared with today's. This misjudgment of the future of the IT industry led Microsoft to concentrate only on software development while ignoring the Internet.

Today, a common standard configuration for computer users is a low-end Core Duo processor with 1 GB of memory and a 100 GB hard drive, and households will soon be using terabyte-level hard drives.

With the rapid development of Internet technology, many things are easily done online, such as watching TV, listening to music, writing reports, storing travel photos, and sharing new ideas. As time goes by, we find favorite movies, music, and pictures that we always try to collect and save for later reuse. Now, with cloud storage, you can freely store all of this material as you need.

Cloud Computing is considered the next revolution in the science and technology industry; it will bring fundamental changes to working methods and business models. First, Cloud Computing means great business opportunities for small and medium enterprises (SMEs): they can use Cloud Computing as a platform to compete with large companies at a higher level.

Since Microsoft launched its Office business software in 1989, the way we work has undergone great changes. Today, Cloud Computing technology has begun to overturn Microsoft's monopoly, because Cloud Computing brings cloud-based office applications, which offer more computing power and require no software purchase and no installation or maintenance on the local computer.

Second, Cloud Computing means the end of the era of buying hardware. At least small and medium enterprises (SMEs) no longer need to buy expensive hardware to meet growing computing demand; they can simply rent Cloud Computing capacity from vendors, avoiding large investments in hardware. Meanwhile, the company's technical department no longer has to bear the headache of technical maintenance and can save a lot of time for business innovation and creation.

Take Amazon as an example. Its Cloud Computing products are inexpensive (though still profitable) and have attracted a large number of small and medium enterprises, and even large companies such as The New York Times, Red Hat, and SanDisk. Amazon charges 15 cents per GB of storage per month, and leasing a server costs 10 cents an hour. Reportedly, Amazon's "cloud" investment is only $300 per computer; if electricity also costs $300, then a server leased without interruption for a full year at these rates earns Amazon $876, a profit of about 45% on that $600 cost, which is higher than the gross margin on book sales.
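The Amazon figures can be checked with simple arithmetic. This sketch assumes, as the text does, a $300 hardware cost per computer, a $300 electricity cost for the year, and a lease at 10 cents an hour for every hour of a 365-day year; the variable names are illustrative. It shows that the "about 45%" figure corresponds to profit measured against the $600 cost; measured against revenue, the margin is closer to 32%.

```python
HOURS_PER_YEAR = 24 * 365  # uninterrupted lease for one non-leap year

rate_cents_per_hour = 10   # $0.10 per server-hour, from the text
hardware_cost = 300        # dollars per computer ("reportedly")
power_cost = 300           # dollars for the year, the text's assumption

revenue = rate_cents_per_hour * HOURS_PER_YEAR // 100  # dollars
cost = hardware_cost + power_cost
profit = revenue - cost

print(revenue, cost, profit)       # 876 600 276
print(round(profit / cost, 2))     # 0.46 (profit relative to cost)
print(round(profit / revenue, 2))  # 0.32 (profit relative to revenue)
```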

With the rapid development of Cloud Computing, traditional hardware manufacturers once again face a crisis. Dell, HP, Sun, and other companies worry that the U.S. market is shrinking; perhaps the decline of the hardware market really is coming.

The impact of Cloud Computing on business models is mainly reflected in innovation in market space. Harvard Business School professor Christensen believes that Cloud Computing means a return from the PC era to the mainframe era. In the PC era, the PC offered many good applications and functions; now we are going back to the mainframe era. Today's mainframe can never be seen or touched, but it really exists: it sits out there, in the clouds.

(1) Future prospects

Imagine what happens when a computer's computing power is no longer limited by local hardware: mobile devices can become smaller and lighter yet handle more demanding work. A notebook could be as thin and light as paper yet still run the most demanding online games, and you could use Photoshop online to edit photos from your mobile phone.

Even more attractive, companies can obtain very high computing power with a low investment, no longer need to purchase expensive hardware, and are freed from the burden of frequent maintenance and upgrades of their terminal equipment.

For example, a real estate company (Gossipy) wanted to build a database to analyze 12 years of property-acquisition data for 670,000 families in order to give consumers better recommendations. Initial estimates suggested that building everything itself, from hardware to software, would take 6 months and one million dollars.

In the end, they chose Amazon's "Elastic Compute Cloud" service. Using Amazon's powerful data analysis capabilities, they completed the project in only 3 weeks at a cost of less than 5 million. This is the charm and power of Cloud Computing: computing resources are allocated according to need, making full use of the performance of large-scale computer clusters. If you use only 5% of the resources, you pay only 5% of the price, rather than paying for 100% of the equipment as in the past.

(2) Cloud Computing standards

When it comes to Cloud Computing standards, companies, including IT giants such as Amazon, IBM, Microsoft, and Sun, are each concerned with their own. The benefits of entering the market as early as possible are obvious: winning a good public relations image, attracting the attention of academia and industry, and pushing the Cloud Computing market forward through continuous development of new technology. More importantly, Cloud Computing is considered a key step in moving users from the desktop to the Internet; whoever wins this battle will win the power to make the rules during a critical period of change.

It is therefore not difficult to understand why Amazon and Sun are joining this competition so actively. In 2007, Amazon opened its "Flexible Cloud Computing" service to developers, so that small software companies can use the processing power of Amazon's data centers on demand and buy data storage according to their needs.

Sun introduced its "Project Blackbox" program. Based on cloud theory, the program holds that future data centers will no longer be confined to crowded, stuffy rooms but can be housed in a movable container, which companies can relocate to the suburbs or other places to reduce facility costs.

Microsoft and Google play the most important roles in this race. As we all know, Google has been trying to provide more computing power and services to users through the Internet in order to undermine Microsoft's desktop monopoly. Google promotes its Cloud Computing platform in open-source form, which means that users can obtain the platform code and modify it. This is regarded as a favorable way to promote Cloud Computing. Eric Schmidt believed that "90% of computing tasks can be completed through Cloud Computing."

It is obvious that the major companies are carving up the big cake of Cloud Computing. However, we believe that the ultimate beneficiaries are the users: Cloud Computing will take us into a new world and truly change our lifestyle in the future.


--------------------------------------------------

References:

(1) http://en.wikipedia.org/wiki/Cloud_computing

(2) Michael Miller, 《Cloud Computing: Web-Based Applications That Change the Way You Work and Collaborate Online》, China Machine Press, May 2010, pp. 12-15.

(3) John W. Rittinghouse, James F. Ransome, 《Cloud Computing: Implementation, Management, and Security》, China Machine Press, May 2010, Chapter 7, p. 124.

(4) http://gihyo.jp/design/serial/01/tcloud/0004

(5) http://en.wikiquote.org/wiki/Bill_Gates

(6) http://www.netvalley.com/archives/mirrors/davemarsh-timeline-1.htm

(7) http://www.slideshare.net/DSPIP/cloud-computing-introduction-2978287

(8) http://www.cioinsight.com/c/a/Strategic-Tech/Cloud-Computing-Anything-as-a-Service/

(9) http://itknowledgeexchange.techtarget.com/data-center-facilities/tag/google-data-center/

(10) http://www.focus.com/articles/hosting-bandwidth/top-10-cloud-computing-trends/

(11) Yang Wenzhi, 《Cloud Computing Technologies and Architecture》, Chemical Industry Press of China, October 2010, Chapter 3, pp. 43-76; Chapter 7, pp. 227-240.

(12) Zhang Weiming, Tang Jianfeng, Luo Zhiguo, 《Cloud Computing》, China Science Press, January 2010, Chapter 4, pp. 104-107.

(13) Jan van Dijk, 《The Network Society》, Second Edition, SAGE Publications, 2006, Chapter 3, p. 58.

Others/Appendix:

Computer System GUI:

GUI is a type of user interface that allows people to interact with computers and computer-controlled devices. Instead of offering only text menus or requiring typed commands, it presents graphical icons, visual indicators, or special graphical elements called "widgets". Often the icons are used in conjunction with text, labels, or text navigation to fully represent the information and actions available to a user. The actions are usually performed through direct manipulation of the graphical elements.

The term GUI is historically restricted to the scope of flat display screens with display resolutions capable of describing generic information, in the tradition of the research at Palo Alto Research Center (PARC). The term does not apply to other high-resolution types of interfaces that are non-generic, such as video games, or that are not restricted to flat screens, such as volumetric displays.

History of the GUI

Data is from Wikipedia.

The graphical user interface, understood as the use of graphic icons and a pointing device to control a computer, has had a steady history of incremental refinements over the last four decades, built on some constant core principles. Several vendors have created their own windowing systems based on independent code but sharing the same basic elements that define the WIMP paradigm. Even though there have been important technological achievements, enhancements to general interaction have come in small steps over previous systems, and there have been few significant breakthroughs in terms of use, as the same organizational metaphors and interaction idioms are still in use.

Initial developments

The concept of a windowing system was introduced by the first real-time graphic display systems for computers: the SAGE Project and Ivan Sutherland's Sketchpad.

Augmentation of Human Intellect (NLS)

Doug Engelbart's Augmentation of Human Intellect project at SRI in the 1960s developed the On-Line System (NLS), which incorporated a mouse-driven cursor and multiple windows. Engelbart had been inspired, in part, by the memex desk-based information machine suggested by Vannevar Bush in 1945. Much of the early research was based on how young humans learn.

Xerox PARC

Engelbart's work directly led to the advances at Xerox PARC. Several people went from SRI to Xerox PARC in the early 1970s. The Xerox PARC team, with Merzouga Wilberts, codified the WIMP (windows, icons, menus and pointers) paradigm, first pioneered on the Xerox Alto experimental computer, which eventually appeared commercially in the Xerox 8010 ('Star') system in 1981.

Apple Lisa and Macintosh

Beginning in 1979, started by Steve Jobs and led by Jef Raskin, the Lisa and Macintosh teams at Apple Computer (which included former members of the Xerox PARC group) continued to develop such ideas. The Macintosh, released in 1984, was the first commercially successful product to use a GUI. It used a desktop metaphor, in which files looked like pieces of paper and directories looked like file folders; there was a set of desk accessories, such as a calculator, notepad, and alarm clock, that the user could place around the screen as desired; and the user could delete files and folders by dragging them to a trash can on the screen. Drop-down menus were also introduced.

There is still some controversy over the amount of influence that Xerox's PARC work, as opposed to previous academic research, had on the GUIs of Apple's Lisa and Macintosh, but it is clear that the influence was extensive, because the first versions of the Lisa GUIs even lacked icons. These prototype GUIs were at least mouse-driven, but they completely ignored the WIMP concept. Rare screenshots of the first GUIs of Apple Lisa prototypes are shown here. Note also that Apple was invited by PARC to view their research, and a number of PARC employees subsequently moved to Apple to work on the Lisa and Macintosh GUI. However, the Apple work extended PARC's considerably, adding manipulatable icons, a fixed menu bar, and direct manipulation of objects in the file system (see Macintosh Finder), for example. A list of the improvements made by Apple to the PARC interface can be read at folklore.org.

DESQview

DESQview was a text-mode multitasking program introduced in July 1985. Running on top of MS-DOS, it allowed users to run multiple DOS programs concurrently in windows. It was the first program to bring multitasking and windowing capabilities to a DOS environment in which existing DOS programs could be used. DESQview was not a true GUI but offered certain components of one, such as resizable, overlapping windows and mouse pointing.

Graphical Environment Manager (GEM)

GEM (Graphical Environment Manager) was a windowing system created by Digital Research, Inc. (DRI) for use with the CP/M operating system on the Intel 8088 and Motorola 68000 microprocessors. Later versions ran over DOS as well.

GEM is known primarily as the graphical user interface (GUI) for the Atari ST series of computers, and was also supplied with a series of IBM PC-compatible computers from Amstrad. It was the core for a small number of DOS programs, the most notable being Ventura Publisher. It was ported to a number of other computers that previously lacked graphical interfaces, but never gained popularity on those platforms. DRI also produced FlexGem for their FlexOS real-time operating system.

Digital Research (DRI) created the Graphical Environment Manager as an add-on program for personal computers. GEM was developed to work with existing CP/M and MS-DOS operating systems on business computers such as IBM-compatibles. It was developed from DRI software known as GSX, designed by a former PARC employee.

The similarity to the Macintosh desktop led to a copyright lawsuit from Apple Computer, and a settlement which involved some changes to GEM. This was to be the first of a series of 'look and feel' lawsuits related to GUI design in the 1980s.

GEM received widespread use in the consumer market from 1985, when it was made the default user interface built into the TOS operating system of the Atari ST line of personal computers. It was also bundled by other computer manufacturers and distributors, such as Amstrad. The GEM desktop faded from the market with the withdrawal of the Atari ST line in 1992.

Amiga Intuition

The Amiga computer was launched by Commodore in 1985 with a GUI called Workbench, based on an internal engine called Intuition that drives all the input events and was developed almost entirely by RJ Mical. The first versions used a garish blue/orange/white/black default palette, which was selected for high contrast on televisions and composite monitors. Workbench presented directories as drawers to fit in with the "workbench" theme. Intuition was the widget and graphics library that made the GUI work. It was driven by user events through the mouse, keyboard, and other input devices.

Due to a mistake made by the Commodore sales department, the first floppies of AmigaOS released with the Amiga 1000 named the whole OS "Workbench". Since then, users and CBM itself used "Workbench" as the nickname for the whole AmigaOS (including AmigaDOS, Extras, etc.). This common usage ended with the release of version 2.0 of AmigaOS, which re-introduced proper names for the installation floppies (AmigaDOS, Workbench, Extras, etc.).

Early versions of AmigaOS did treat Workbench as just another
window on top of a blank screen, but this is due to the ability
of AmigaOS to have invisible screens with a chromakey or a
genlock (one of the most advanced features of the Amiga
platform) without losing the visibility of Workbench itself. In
later AmigaOS versions, Workbench could be set as a borderless
desktop.

Amiga users were able to boot their computer into a command
line interface (CLI, also called the shell). This was a
keyboard-based environment without the Workbench GUI. They could
later start the GUI from the CLI/Shell with the LoadWB command,
which loads Workbench.

Like most GUIs of the day, Amiga's Intuition followed the lead of
Xerox, and sometimes Apple, but it also included a CLI, which
dramatically extended the functionality of the platform. Later
releases added more improvements, such as support for high-color
Workbench screens and 3D icons. Amiga users often preferred
alternative interfaces to the standard Workbench, such as
Directory Opus or the ScalOS interface.


The use of improved third-party GUI engines, such as Magic User
Interface (MUI) and ReAction, became common among users who
preferred more attractive interfaces. These object-oriented
graphics engines, driven by "classes" of graphical objects and
functions, were later standardized in the Amiga environment and
turned Workbench into a complete, modern interface, with new
standard gadgets, animated buttons, true 24-bit color icons,
wider use of wallpapers for screens and windows, and the alpha
channel transparency and shadows that any modern GUI requires.

Modern derivatives of Workbench are Ambient for MorphOS,

ScalOS, Workbench for AmigaOS 4.0 and Wanderer for AROS.


Microsoft Windows

In 1983, Microsoft announced its development of Windows,
a graphical user interface (GUI) for its own operating
system, MS-DOS, which had shipped on IBM PC and
compatible computers since 1981.

The first independent version of Microsoft Windows, version 1.0,
released on November 20, 1985, lacked a degree of functionality
and achieved little popularity. It was originally going to be
called Interface Manager, but Rowland Hanson, the head of
marketing at Microsoft, convinced the company that the name
Windows would be more appealing to consumers. Windows 1.0 was
not a complete operating system, but rather extended MS-DOS and
shared the latter's inherent flaws and problems. Furthermore,
legal challenges by Apple limited its functionality. For example,
windows could only appear "tiled" on the screen; that is, they
could not overlap or overlie one another. Also, there was no
trash can (a place to store files prior to deletion), since Apple
believed it owned the rights to that paradigm. Microsoft later
removed both of these limitations by signing a licensing
agreement.

Microsoft Windows version 2 came out on December 9, 1987, and
proved slightly more popular than its predecessor. Much of the
popularity of Windows 2.0 came by way of its inclusion as a
"run-time version" with Microsoft's new graphical applications,
Excel and Word for Windows. These applications could be started
from MS-DOS, running Windows for the duration of their activity
and closing it down on exit.

Microsoft Windows received a major boost around this time when
Aldus PageMaker appeared in a Windows version, having previously
run only on Macintosh. Some computer historians date this, the
first appearance of a significant non-Microsoft application for
Windows, as the beginning of the success of Windows.

Version 2.0x used the real-mode memory model, which confined it
to a maximum of 1 megabyte of memory. In such a configuration,
it could run under another multitasker, such as DESQview, which
used the 286 protected mode.

Later, two new versions were released: Windows/286 2.1 and
Windows/386 2.1. Like previous versions of Windows, Windows/286
2.1 used the real-mode memory model, but it was the first version
to support the HMA (high memory area). Windows/386 2.1 had a
protected mode kernel with LIM-standard EMS emulation, the
predecessor to XMS, which would finally change the topology of
IBM PC computing. All Windows and DOS-based applications at the
time were real mode, running over the protected mode kernel by
using the virtual 8086 mode, which was new with the 80386
processor.
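The 1-megabyte ceiling of the real-mode memory model, and the HMA that Windows/286 2.1 first supported, both follow from how the 8086 family forms addresses in real mode: a 16-bit segment is shifted left by four bits and added to a 16-bit offset. The Python sketch below (the function names are illustrative, not from any Windows API) computes that address and shows the 65,520 bytes that lie just above 1 MB. On an 8086, with only 20 address lines, those addresses wrap around to the bottom of memory; on an 80286 or later with the A20 line enabled, they reach real memory, and that region is the HMA.

```python
ONE_MB = 1 << 20  # 2**20 bytes: the reach of 20 address lines


def real_mode_address(segment: int, offset: int) -> int:
    """Physical address formed from a 16-bit segment and a 16-bit offset."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    return (segment << 4) + offset


def on_20_bit_bus(address: int) -> int:
    """An 8086 drops the 21st address bit, so addresses past 1 MB wrap to 0."""
    return address & (ONE_MB - 1)


highest = real_mode_address(0xFFFF, 0xFFFF)
print(hex(highest))                 # 0x10ffef
print(highest - ONE_MB + 1)         # 65520 bytes above 1 MB (the HMA)
print(hex(on_20_bit_bus(highest)))  # 0xffef
```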

Version 2.03, and later 3.0, faced challenges from Apple over
its overlapping windows and other features that Apple charged
mimicked the "look and feel" of its operating system and
"embodie[d] and generate[d] a copy of the Macintosh" in its OS.
Judge William Schwarzer dropped all but 9 of the 189 charges
that Apple had brought against Microsoft on January 5, 1989.

Success with Windows 3.0

Microsoft Windows scored a significant success with Windows 3.0,
released in 1990. In addition to improved capabilities given to
native applications, Windows also allowed users to multitask
older MS-DOS based software better than Windows/386 had, thanks
to the introduction of virtual memory. It made PC compatibles
serious competitors to the Apple Macintosh. This was helped by
the improved graphics available on PCs by this time (by means of
VGA video cards) and by the Protected/Enhanced mode, which
allowed Windows applications to use more memory, more painlessly
than their DOS counterparts could. Windows 3.0 can run in any of
Real, Standard, or 386 Enhanced modes, and is compatible with any
Intel processor from the 8086/8088 up to the 80286 and 80386.
Windows 3.0 tries to auto-detect which mode to run in, although
it can be forced to run in a specific mode using the switches /r
(real), /s (standard), and /3 (386 enhanced). This was the first
version to run Windows programs in protected mode, although the
386 enhanced mode kernel was an enhanced version of the protected
mode kernel in Windows/386.
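The choice between auto-detection and the /r, /s and /3 switches can be modelled as a small decision function. This is a hypothetical sketch, not Microsoft's code: it considers only the processor class (standard mode needs the protected mode of the 80286, 386 enhanced mode needs an 80386) and ignores other factors, such as installed memory, that the real start-up logic may have weighed. The names `MODE_SWITCHES`, `SUPPORTED` and `select_mode` are invented for illustration.

```python
# Hypothetical model of how Windows 3.0 might pick its operating mode.
# Simplification: only the processor class is considered.
MODE_SWITCHES = {"/r": "real", "/s": "standard", "/3": "386 enhanced"}

# Modes each processor class can run, from least to most capable.
SUPPORTED = {
    "8086": ["real"],
    "80286": ["real", "standard"],
    "80386": ["real", "standard", "386 enhanced"],
}


def select_mode(cpu: str, args: list[str]) -> str:
    modes = SUPPORTED[cpu]
    for arg in args:
        forced = MODE_SWITCHES.get(arg.lower())
        if forced is not None:
            # A switch overrides auto-detection, but only if the CPU can run that mode.
            if forced not in modes:
                raise ValueError(f"{forced} mode is not available on an {cpu}")
            return forced
    # No switch given: auto-detect by choosing the most capable supported mode.
    return modes[-1]


print(select_mode("80386", []))      # 386 enhanced
print(select_mode("80386", ["/s"]))  # standard
```

Ordering each list from least to most capable keeps the auto-detect rule to a single `modes[-1]` lookup.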

Due to this backward compatibility, Windows 3.0 applications also
had to be compiled in a 16-bit environment, without ever using
the full 32-bit capabilities of the 386 CPU.

A "multimedia" version, Windows 3.0 with Multimedia Extensions
1.0, was released several months later. It was bundled with
"multimedia upgrade kits", comprising a CD-ROM drive and a sound
card such as the Creative Labs Sound Blaster Pro. This version was
the precursor to the multimedia features available in Windows 3.1
and later, and was part of Microsoft's specification for the
Multimedia PC.

The features listed above and growing market

support from application software developers

made Windows 3.0 wildly successful, selling

around 10 million copies in the two years

before the release of version 3.1.

Windows 3.0 became a major source of income

for Microsoft, and led the company to revise

some of its earlier plans.

A step sideways: OS/2

During the mid-to-late 1980s, Microsoft and IBM had been
cooperatively developing OS/2 as a successor to DOS. OS/2 would
take full advantage of the aforementioned protected mode of the
Intel 80286 processor and up to 16 MB of memory. OS/2 1.0,
released in 1987, supported swapping and multitasking and could
run DOS executables.

A GUI, called the Presentation Manager (PM),

was not available with OS/2 until version 1.1,

released in 1988. Its API was incompatible with

Windows. (Among other things, Presentation

Manager placed X,Y coordinate 0,0 at the

bottom left of the screen like Cartesian

coordinates, while Windows put 0,0 at the top

left of the screen like most other computer

window systems.) Version 1.2, released in 1989,

introduced a new file system, HPFS, to replace

the FAT file system.
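Because Presentation Manager measured Y upward from the bottom-left corner while Windows measured it downward from the top-left, code ported between the two had to flip every vertical coordinate. A minimal sketch, assuming integer pixel rows numbered 0 to height - 1; the helper names are hypothetical, and rectangle edges, which some APIs treat as exclusive, would need a slightly different formula.

```python
def windows_to_pm(x: int, y: int, height: int) -> tuple[int, int]:
    """Map a pixel from a top-left origin (Windows) to a bottom-left origin (PM).

    X is unchanged; Y counts down from the top in Windows but up from the
    bottom in Presentation Manager, on a surface `height` pixels tall.
    """
    if not 0 <= y < height:
        raise ValueError("y must lie within the surface")
    return x, height - 1 - y


def pm_to_windows(x: int, y: int, height: int) -> tuple[int, int]:
    """The flip is its own inverse, so the same formula converts back."""
    return windows_to_pm(x, y, height)


print(windows_to_pm(10, 0, 480))  # (10, 479): the top row in Windows
```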

By the early 1990s, conflicts had developed in the Microsoft/IBM
relationship. The two companies cooperated in developing their
PC operating systems and had access to each other's code.
Microsoft wanted to develop Windows further, while IBM wanted
future work to be based on OS/2. In an attempt to resolve this
tension, IBM and Microsoft agreed that IBM would develop OS/2
2.0, to replace OS/2 1.3 and Windows 3.0, while Microsoft would
develop a new operating system, OS/2 3.0, to later succeed OS/2
2.0.

This agreement soon fell apart, however, and the Microsoft/IBM
relationship was terminated. IBM continued to develop OS/2, while
Microsoft changed the name of its (as yet unreleased) OS/2 3.0 to
Windows NT. Both retained the rights to use OS/2 and Windows
technology developed up to the termination of the agreement;
Windows NT, however, was to be written anew, mostly
independently.

After an interim 1.3 version that fixed many remaining problems
with the 1.x series, IBM released OS/2 version 2.0 in 1992. This
was a major improvement: it featured a new, object-oriented GUI,
the Workplace Shell (WPS), which included a desktop and was
considered by many to be OS/2's best feature. Microsoft would
later imitate much of it in Windows 95. Version 2.0 also provided
a full 32-bit API, offered smooth multitasking, and could take
advantage of the 4 gigabytes of address space provided by the
Intel 80386. Still, much of the system had 16-bit code internally,
which required, among other things, device drivers to be 16-bit
code as well. This was one of the reasons for the chronic shortage
of OS/2 drivers for the latest devices. Version 2.0 could also run
DOS and Windows 3.0 programs, since IBM had retained the right to
use the DOS and Windows code as a result of the breakup.

At the time, it was unclear who would win the so-called "Desktop
wars". In the end, however, OS/2 did not manage to gain enough
market share, even though IBM subsequently released several
improved versions.

Windows 3.1 and NT

In response to the impending release of OS/2 2.0, Microsoft
developed Windows 3.1, which included several minor improvements
to Windows 3.0 (such as display of TrueType scalable fonts,
developed jointly with Apple), but primarily consisted of bug fixes
and multimedia support. It also dropped support for real mode and
ran only on an 80286 or better processor. Microsoft later released
Windows 3.11, a touch-up to Windows 3.1 that included all of the
patches and updates that followed the release of Windows 3.1 in
1992. Around the same time, Microsoft released Windows for
Workgroups (WfW), available both as an add-on for existing Windows
3.1 installations and as a version that included the base Windows
environment and the networking extensions in one package. Windows
for Workgroups included improved network drivers and protocol
stacks, and support for peer-to-peer networking. One optional
download for WfW was the "Wolverine" TCP/IP protocol stack, which
allowed easy access to the Internet through corporate networks.
There are two versions of Windows for Workgroups, WfW 3.1 and WfW
3.11. Unlike the previous versions, Windows for Workgroups 3.11
runs only in 386 Enhanced mode and requires at least an 80386SX
processor.

All these versions continued version 3.0's impressive sales pace.
Even though the 3.1x series still lacked most of the important
features of OS/2, such as long file names, a desktop, or
protection of the system against misbehaving applications,
Microsoft quickly took over the OS and GUI markets for the IBM PC.
The Windows API became the de facto standard for consumer
software.

Meanwhile, Microsoft continued to develop Windows NT. The main
architect of the system was Dave Cutler, one of the chief
architects of VMS at Digital Equipment Corporation (DEC; later
purchased by Compaq, now part of Hewlett-Packard). Microsoft hired
him in 1988 to create a portable version of OS/2, but Cutler
created a completely new system instead. Cutler had been
developing a follow-on to VMS at DEC called Mica, and when DEC
dropped the project, he brought the expertise and some engineers
with him to Microsoft. DEC also believed he had brought Mica's
code to Microsoft, and sued. Microsoft eventually paid US$150
million and agreed to support DEC's Alpha CPU chip in NT.

Windows NT 3.1 (Microsoft marketing wanted Windows NT to appear to
be a continuation of Windows 3.1) arrived in beta form to
developers at the July 1992 Professional Developers Conference in
San Francisco. At the conference, Microsoft announced its
intention to develop a successor to both Windows NT and the
replacement for Windows 3.1 (code-named Chicago), which would
unify the two into one operating system. This successor was
code-named Cairo. In hindsight, Cairo was a much more difficult
project than Microsoft had anticipated; as a result, NT and
Chicago would not be unified until Windows XP, and parts of Cairo
have still not made it into Windows. In particular, WinFS, the
much-touted Object File System of Cairo, was put on hold for a
while; Microsoft later announced that it had discontinued WinFS
and would gradually incorporate the technologies developed for it
into other products and technologies, notably Microsoft SQL
Server.

Driver support was lacking due to the increased difficulty of
programming for NT's superior hardware abstraction model. This
problem plagued the NT line all the way through Windows 2000.
Programmers complained that it was too hard to write drivers for
NT, and hardware developers were not willing to go through the
trouble of developing drivers for a small segment of the market.
Additionally, although NT allowed good performance and fuller
exploitation of system resources, it was also resource-intensive
on limited hardware, and thus was only suitable for larger, more
expensive machines. Windows NT was impractical for home users
because of its resource demands; moreover, its GUI was simply a
copy of Windows 3.1's, which was inferior to the OS/2 Workplace
Shell, so there was no good reason to propose it as a replacement
for Windows 3.1.

However, the same features made Windows NT well suited to the LAN
server market (which in 1993 was experiencing a rapid boom, as
office networking was becoming a commodity), as it offered
advanced network connectivity options and the efficient NTFS file
system. Windows NT version 3.51 was Microsoft's entry into this
market, a large part of which would be won over from Novell in
the following years.

One of Microsoft's biggest advances initially developed for
Windows NT was a new 32-bit API to replace the legacy 16-bit
Windows API. This API was called Win32, and from then on Microsoft
referred to the older 16-bit API as Win16. The Win32 API had three
main implementations: one for Windows NT, one for Win32s (a subset
of Win32 that could be used on Windows 3.1 systems), and one for
Chicago. Thus Microsoft sought to ensure some degree of
compatibility between the Chicago design and Windows NT, even
though the two systems had radically different internal
architectures. Windows NT was the first Windows operating system
based on a hybrid kernel.

Windows 95

After Windows 3.11, Microsoft began to develop a new
consumer-oriented version of the operating system, code-named
Chicago.

Chicago was designed to have support for

32-bit pre-emptive multitasking like

OS/2 and Windows NT, although a 16-bit

kernel would remain for the sake of backward

compatibility. The Win32 API first

introduced with Windows NT was adopted as

the standard 32-bit programming interface,

with Win16 compatibility being preserved

through a technique known as "thunking".
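One job of a thunk is translating pointers between the two worlds: 16-bit code passes segmented 16:16 far pointers (a selector and an offset), while Win32 code expects flat 32-bit addresses. In 386 protected mode, the top 13 bits of a selector index a descriptor table, bit 2 chooses the local (LDT) or global (GDT) table, and the low two bits hold the requested privilege level; the flat address is the segment's base plus the offset. The sketch below models only that translation step, using a made-up LDT. Real thunks also switch stacks and convert parameters, and `Descriptor` and `far16_to_flat32` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Descriptor:
    base: int   # linear address where the segment starts
    limit: int  # highest valid offset inside the segment


def decode_selector(selector: int) -> tuple[int, int, int]:
    """Split a selector into (table index, table indicator, requested privilege level)."""
    return selector >> 3, (selector >> 2) & 1, selector & 0b11


def far16_to_flat32(selector: int, offset: int, ldt: dict[int, Descriptor]) -> int:
    """Translate a 16:16 far pointer into a flat 32-bit address via the LDT."""
    index, table, _rpl = decode_selector(selector)
    if table != 1:
        raise ValueError("this sketch only models LDT selectors")
    segment = ldt[index]
    if offset > segment.limit:
        raise ValueError("offset lies beyond the segment limit")
    return segment.base + offset


# Made-up LDT entry 5: a 64 KB segment based at linear address 0x00410000.
ldt = {5: Descriptor(base=0x00410000, limit=0xFFFF)}
selector = (5 << 3) | 0b100 | 0b11  # index 5, LDT, ring 3 -> 0x2F
print(hex(far16_to_flat32(selector, 0x1234, ldt)))  # 0x411234
```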

A new GUI was not originally planned as part

of the release, although elements of the

Cairo user interface were borrowed and added

as other aspects of the release (notably

Plug and Play) slipped.

Microsoft did not convert all of the Windows code to 32-bit;
parts of it remained 16-bit (albeit not directly using real mode)
for reasons of compatibility, performance, and development time.
This, and the fact that numerous design flaws had to be carried
over from the earlier Windows versions, eventually began to affect
the operating system's efficiency and stability.

Microsoft marketing adopted Windows 95 as the product name for
Chicago when it was released on August 24, 1995. Microsoft gained
in two ways from the release. First, it made it impossible for
consumers to run Windows 95 on a cheaper, non-Microsoft DOS.
Second, although traces of DOS were never completely removed from
the system, and a version of DOS would be loaded briefly as part
of the boot process, Windows 95 applications ran solely in 386
Enhanced Mode, with a flat 32-bit address space and virtual
memory. These features made it possible for Win32 applications to
address up to 2 gigabytes of virtual RAM (with another 2 GB
reserved for the operating system) and, in theory, prevented them
from inadvertently corrupting the memory space of other Win32
applications. In this respect, the functionality of Windows 95
moved closer to Windows NT, although Windows 95/98/ME does not
support more than 512 megabytes of physical RAM without obscure
system tweaks.
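The 2 GB figure is simple arithmetic on a 32-bit address space. The sketch below follows the even application/system split described above; the actual Windows 95 memory map subdivided these regions further (for example, with shared areas for 16-bit code and DLLs), so treat the hypothetical `region` helper as an illustration rather than the real layout.

```python
GiB = 2 ** 30
ADDRESS_BITS = 32

total = 2 ** ADDRESS_BITS                 # 4 GiB of virtual addresses per process
application_space = total // 2            # lower 2 GiB for the Win32 application
system_space = total - application_space  # upper 2 GiB reserved for the OS


def region(address: int) -> str:
    """Classify a 32-bit virtual address under the simplified 2 GB / 2 GB split."""
    if not 0 <= address < total:
        raise ValueError("not a 32-bit address")
    return "application" if address < application_space else "system"


print(total // GiB, application_space // GiB, system_space // GiB)  # 4 2 2
print(hex(application_space - 1))  # 0x7fffffff, the last application address
```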

IBM continued to market OS/2, producing later versions as OS/2
3.0 and 4.0 (also called Warp). Responding to complaints about
OS/2 2.0's high demands on computer hardware, IBM significantly
optimized version 3.0 for both speed and size. Before Windows 95
was released, OS/2 Warp 3.0 was even shipped preinstalled by
several large German hardware vendor chains. However, with the
release of Windows 95, OS/2 began to lose market share.

It is probably impossible to pin down a specific reason why OS/2
failed to gain much market share. While OS/2 continued to run
Windows 3.1 applications, it lacked support for anything but the
Win32s subset of the Win32 API (see above). Unlike with Windows
3.1, IBM did not have access to the source code for Windows 95 and
was unwilling to commit the time and resources to emulate the
moving target of the Win32 API. IBM also introduced OS/2 into the
United States v. Microsoft case, blaming unfair marketing tactics
on Microsoft's part, but many people would probably agree that
IBM's own marketing problems and lack of support for developers
contributed at least as much to the failure.

Microsoft released five different versions of Windows 95:

Windows 95 - original release

Windows 95 A - included Windows 95 OSR1

slipstreamed into the installation.

Windows 95 B - (OSR2) included several

major enhancements, Internet Explorer

(IE) 3.0 and full FAT32 file system

support.

Windows 95 B USB - (OSR2.1) included

basic USB support.

Windows 95 C - (OSR2.5) included all the

above features, plus IE 4.0. This was

the last 95 version produced.

OSR2, OSR2.1, and OSR2.5 were not released to the general public;
rather, they were available only to OEMs that would preload the OS
onto computers. Some companies sold new hard drives with OSR2
preinstalled (officially justifying this as necessary because of
the hard drive's capacity). This product was sold under the name
Windows 97 in some European countries. The first Microsoft Plus!
add-on pack was sold for Windows 95.

Windows NT 4.0

As part of its effort to introduce Windows NT to the workstation
market, Microsoft released Windows NT 4.0, which features the new
Windows 95 interface on top of the Windows NT kernel. (A patch was
available for developers to make NT 3.51 use the new UI, but it
was quite buggy; the new UI was first developed on NT, but Windows
95 was released before NT 4.0.)

Windows NT 4.0 came in four versions:

Windows NT 4.0 Workstation

Windows NT 4.0 Server

Windows NT 4.0 Server, Enterprise Edition

(includes support for 8-way SMP and

clustering)

Windows NT 4.0 Terminal Server

Windows 98

On June 25, 1998, Microsoft released Windows 98, which was widely
regarded as a minor revision of Windows 95 but was generally found
to be more stable and reliable than its 1995 predecessor. It
included new hardware drivers and better support for the FAT32
file system, which allows disk partitions larger than the 2 GB
maximum accepted by Windows 95. The USB support in Windows 98 was
far superior to the token, sketchy support provided by the OEM
editions of Windows 95. Windows 98 also controversially integrated
the Internet Explorer browser into the Windows GUI and the Windows
Explorer file manager, prompting the opening of the United States
v. Microsoft case, which dealt with the question of whether
Microsoft was abusing its hold on the PC operating system market
to compete unfairly with companies such as Netscape.
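The 2 GB partition limit comes from the FAT16 layout: cluster numbers are 16 bits wide, and Windows 95 used clusters of at most 32 KB. FAT32 widens cluster numbers to 28 usable bits. The rough upper bound below multiplies the number of addressable clusters by the cluster size; it is a simplification that ignores the handful of reserved cluster values, and `max_volume_bytes` is a hypothetical helper.

```python
def max_volume_bytes(cluster_number_bits: int, cluster_size_bytes: int) -> int:
    """Rough ceiling on volume size: addressable clusters times bytes per cluster.

    Simplification: ignores the few cluster values a FAT reserves.
    """
    return (2 ** cluster_number_bits) * cluster_size_bytes


fat16 = max_volume_bytes(16, 32 * 1024)  # 16-bit cluster numbers, 32 KB clusters
fat32 = max_volume_bytes(28, 4 * 1024)   # 28-bit cluster numbers, 4 KB clusters

print(fat16 // 2 ** 30)  # 2 (GiB)
print(fat32 // 2 ** 40)  # 1 (TiB)
```

The FAT32 figure is only the theoretical ceiling this formula gives for 4 KB clusters, not a limit Windows 98 itself guaranteed.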

In 1999, Microsoft released Windows 98 Second Edition, an interim
release whose most notable feature was the addition of Internet
Connection Sharing, a form of network address translation that
allows several machines on a LAN (local area network) to share a
single Internet connection. Hardware support through device
drivers was expanded. Many minor problems present in the original
Windows 98 were found and fixed, which made it, according to many,
the most stable release of Windows 9x.
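Internet Connection Sharing is described above as a form of network address translation. The common port-translating variant keeps a table mapping each private address and port to a port on the single public address, so that replies can be routed back to the right LAN machine. This is a toy sketch of that general idea, not the ICS implementation; the class name, the starting port 50000, and the example addresses (private 192.168.0.x, documentation range 203.0.113.x) are assumptions.

```python
import itertools
from typing import Optional


class PortTranslator:
    """Toy port-translating NAT table: many private endpoints share one public IP."""

    def __init__(self, public_ip: str, first_port: int = 50000) -> None:
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)  # next unused public port
        self._outbound: dict[tuple[str, int], int] = {}  # private endpoint -> public port
        self._inbound: dict[int, tuple[str, int]] = {}   # public port -> private endpoint

    def outbound(self, private_ip: str, private_port: int) -> tuple[str, int]:
        """Rewrite an outgoing packet's source, creating a mapping on first use."""
        key = (private_ip, private_port)
        if key not in self._outbound:
            port = next(self._next_port)
            self._outbound[key] = port
            self._inbound[port] = key
        return self.public_ip, self._outbound[key]

    def inbound(self, public_port: int) -> Optional[tuple[str, int]]:
        """Find the LAN endpoint a reply belongs to (None if no mapping exists)."""
        return self._inbound.get(public_port)


nat = PortTranslator("203.0.113.7")
print(nat.outbound("192.168.0.2", 1025))  # ('203.0.113.7', 50000)
print(nat.outbound("192.168.0.3", 1025))  # ('203.0.113.7', 50001)
print(nat.inbound(50001))                 # ('192.168.0.3', 1025)
```

Keeping two dictionaries makes both directions of the lookup constant-time, at the cost of storing each mapping twice.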

Windows 2000

Windows 2000 (also referred to as Win2K) is a

preemptive, interruptible, graphical and

business-oriented operating system designed

to work with either uniprocessor or symmetric

multi-processor computers. It is part of the

Microsoft Windows NT line of operating

systems and was released on February 17,

2000. It was succeeded by Windows XP in

October 2001 and Windows Server 2003 in

April 2003. Windows 2000 is classified as

a hybrid kernel operating system.

Four editions of Windows 2000 have been released: Professional,
Server, Advanced Server, and Datacenter Server. Additionally,
Microsoft offered Windows 2000 Advanced Server Limited Edition and
Windows 2000 Datacenter Server Limited Edition, which were released
in 2001 and run on 64-bit Intel Itanium microprocessors.[4] While
each edition of Windows 2000 targets a different market, they all
share a core set of common functionality, including many system
utilities such as the Microsoft Management Console and standard
system administration applications. Support for people with
disabilities was improved over Windows NT 4.0 with a number of new
assistive technologies, and Microsoft included increased support
for different languages and locale information. All versions of
the operating system support the Windows NT file system, NTFS
3.0,[5] the Encrypting File System, and both basic and dynamic disk
storage. The Windows 2000 Server family has additional
functionality, including the ability to provide Active Directory
services (a hierarchical framework of resources), Distributed File
System (a file system that supports sharing of files), and
fault-redundant storage volumes. Windows 2000 can be installed and
deployed to corporate desktops through either an attended or an
unattended installation. Unattended installations rely on answer
files to fill in installation information, and can be performed
from a bootable CD, with Microsoft Systems Management Server, or
with the System Preparation Tool. Windows 2000 is the last
NT-kernel based version of Microsoft Windows that does not include
Windows Product Activation.

At the time of its release, Microsoft marketed Windows 2000 as the
most secure Windows version it had ever shipped;[6] however, it
became the target of a number of high-profile malware attacks,
such as Code Red and Nimda. More than seven years after its
release, it continues to receive patches for security
vulnerabilities on a near-monthly basis.

Microsoft released Windows 2000, known during its development
cycle as "NT 5.0", in February 2000. It was successfully deployed
in both the server and the workstation markets. Among Windows
2000's most significant new features was Active Directory, a
near-complete replacement of the NT 4.0 Windows Server domain
model, which built on industry-standard technologies like DNS,
LDAP, and Kerberos to connect machines to one another. Terminal
Services, previously only available as a separate edition of NT 4,
was expanded to all server versions. A number of features from
Windows 98 were incorporated as well, such as an improved Device
Manager, Windows Media Player, and a revised DirectX that made it
possible for the first time for many modern games to work on the
NT kernel.

Although Windows 2000 could upgrade a computer running Windows 98,
it was not widely regarded as a product suitable for home users.
There were many reasons for this, chief among them the lack of
device drivers for many common consumer devices, such as scanners
and printers, at the time of its release (a situation that had
reversed by the time Windows XP was released).

Windows 2000 was available in six editions:

Windows 2000 Professional

Windows 2000 Server

Windows 2000 Advanced Server

Windows 2000 Datacenter Server

Windows 2000 Advanced Server Limited Edition

Windows 2000 Datacenter Server Limited Edition

Windows Millennium Edition (Me)

In September 2000, Microsoft introduced Windows Me (Millennium
Edition), which upgraded Windows 98 with enhanced multimedia and
Internet features. It also introduced the first version of System
Restore, which allowed users to revert their system state to a
previous "known-good" point in the case of system failure. System
Restore was a notable feature that made its way into Windows XP.
The first version of Windows Movie Maker was introduced as well.

Windows Me was conceived as a quick one-year project that served
as a stopgap release between Windows 98 and Windows XP. Many of
the new features were available from the Windows Update site as
updates for older Windows versions (System Restore was an
exception). As a result, Windows Me was not regarded as a distinct
operating system in the way that 95 or 98 were. Windows Me was
widely criticised for serious stability issues and for lacking
real mode DOS support, to the point of being referred to as the
"Mistake Edition". Windows Me was the last operating system to be
based on the Windows 9x (monolithic) kernel and MS-DOS. It is also
the last Windows operating system to lack Product Activation.

Windows XP

Windows XP is a line of operating systems

developed by Microsoft for use on general-

purpose computer systems, including home and

business desktops, notebook computers, and

media centers. The letters "XP" stand for

eXPerience.[2] It was codenamed "Whistler",

after Whistler, British Columbia, as many

Microsoft employees skied at the Whistler-

Blackcomb ski resort during its development.

Windows XP is the successor to both Windows

2000 Professional and Windows Me, and is the

first consumer-oriented operating system

produced by Microsoft to be built on the

Windows NT kernel and architecture. Windows

XP was first released on October 25, 2001,

and over 400 million copies were in use in

January 2006, according to an estimate in

that month by an IDC analyst.[3] It was
succeeded by Windows Vista, which was

released to volume license customers on

November 8, 2006, and worldwide to the

general public on January 30, 2007.

The most common editions of the operating

system are Windows XP Home Edition, which

is targeted at home users, and Windows XP

Professional, which has additional

features such as support for Windows Server

domains and two physical processors, and

is targeted at power users and business

clients. Windows XP Media Center Edition

has additional multimedia features enhancing

the ability to record and watch TV shows,

view DVD movies, and listen to music.

Windows XP Tablet PC Edition is designed to

run the ink-aware Tablet PC platform. Two

separate 64-bit versions of Windows XP were

also released, Windows XP 64-bit Edition

for IA-64 (Itanium) processors and Windows

XP Professional x64 Edition for x86-64.

Windows XP is known for its improved stability

and efficiency over the 9x versions of

Microsoft Windows. It presents a significantly

redesigned graphical user interface, a change

Microsoft promoted as more user-friendly than

previous versions of Windows. New software

management capabilities were introduced to

avoid the "DLL hell" that plagued older

consumer-oriented 9x versions of Windows. It is

also the first version of Windows to use

product activation to combat software piracy,

a restriction that did not sit well with some

users and privacy advocates. Windows XP has

also been criticized by some users for security

vulnerabilities, tight integration of

applications such as Internet Explorer and

Windows Media Player, and for aspects of its

default user interface.

Windows XP had been in development since early

1999, when Microsoft started working on Windows

Neptune, an operating system intended to be the

"Home Edition" equivalent to Windows 2000

Professional. It was eventually merged into the

Whistler project, which later became Windows XP.

In 2001, Microsoft introduced Windows XP

(codenamed "Whistler"). The merging of the

Windows NT/2000 and Windows 95/98/Me lines was

achieved with Windows XP. Windows XP uses the

Windows NT 5.1 kernel, marking the entrance of

the Windows NT core to the consumer market, to

replace the aging 16/32-bit branch. Windows XP

was current longer than any other version of

Windows, from 2001 all the way to 2007 when

Windows Vista was released to consumers. The

Windows XP line of operating systems was

surpassed by Windows Vista on January 30, 2007.

Windows XP is available in a number of versions:
Windows XP Home Edition, for home desktops and laptops (notebooks)
Windows XP Home Edition N, as above, but without a default installation of Windows Media Player, as mandated by a European Union ruling
Windows XP Professional, for business and power users
Windows XP Professional N, as above, but without a default installation of Windows Media Player, as mandated by a European Union ruling
Windows XP Media Center Edition (MCE), released in November 2002 for desktops and notebooks with an emphasis on home entertainment
Windows XP Media Center Edition 2003
Windows XP Media Center Edition 2004
Windows XP Media Center Edition 2005, released on October 12, 2004
Windows XP Tablet PC Edition, for tablet PCs (PCs with touch screens)
Windows XP Tablet PC Edition 2005
Windows XP Embedded, for embedded systems
Windows XP Starter Edition, for new computer users in developing countries
Windows XP Professional x64 Edition, released on April 25, 2005 for home and workstation systems using 64-bit processors based on the x86-64 instruction set (AMD calls this AMD64, Intel calls it Intel 64)
Windows XP 64-bit Edition, a version for Intel's Itanium line of processors that maintains 32-bit compatibility solely through a software emulator; it is roughly analogous to Windows XP Professional in features, and was discontinued in September 2005 when the last vendor of Itanium workstations stopped shipping Itanium systems marketed as "Workstations"
Windows XP 64-bit Edition 2003, based on the Windows NT 5.2 codebase

Windows Server 2003

On April 24, 2003, Microsoft launched Windows Server 2003, a
notable update to Windows 2000 Server encompassing many new
security features, a new "Manage Your Server" wizard that
simplifies configuring a machine for specific roles, and improved
performance. It has the version number NT 5.2. A few services not
essential for server environments are disabled by default for
stability reasons, most noticeably the "Windows Audio" and
"Themes" services; users have to enable them manually to get sound
or the "Luna" look of Windows XP. Hardware acceleration for the
display is also turned off by default; users have to raise the
acceleration level themselves if they trust the display card
driver.

In December 2005, Microsoft released Windows Server 2003 R2, which is essentially Windows Server 2003 with SP1 (Service Pack 1) plus an add-on package. Among the new features are a number of management features for branch offices, file serving, and company-wide identity integration.

Windows Server 2003 is available in five editions:

Web Edition

Standard Edition

Enterprise Edition (32 and 64-bit)

Datacenter Edition

Small Business Server

Thin client:

Windows Fundamentals for Legacy PCs

In July 2006, Microsoft released a thin-client version of Windows XP Service Pack 2, called Windows Fundamentals for Legacy PCs (WinFLP). It is available only to Software Assurance customers. The aim of WinFLP is to give companies a viable upgrade option for older PCs running Windows 95, 98, and Me, one that will be supported with patches and updates for the next several years. Most user applications will typically be run on a remote machine using Terminal Services or Citrix.

Windows Vista

The current client version of Windows, Windows Vista, was released on November 30, 2006[1] to business customers, with consumer versions following on January 30, 2007. Windows Vista aims to enhance security by introducing a new restricted user mode called User Account Control, replacing the "administrator-by-default" philosophy of Windows XP. Vista also features new graphics capabilities, the Windows Aero GUI, new applications (such as Windows Calendar, Windows DVD Maker, and some new games including Chess, Mahjong, and Purble Place), a revised and more secure version of Internet Explorer, a new version of Windows Media Player, and a large number of underlying architectural changes.

Windows Vista ships in several editions:

Starter

Home Basic

Home Premium

Business

Enterprise

Ultimate


Windows Home Server

Windows Home Server (codenamed "Q" or "Quattro") is a server product based on Windows Server 2003, designed for consumer use. The system was announced by Bill Gates on January 7, 2007. Windows Home Server can be configured and monitored using a console program that can be installed on a client PC. Anticipated features include media sharing, local and remote drive backup, and file duplication.

Future development

Windows Server 2008

Windows Server 2008, now scheduled for release on February 27, 2008, was originally known as Windows Server Codename "Longhorn". Windows Server 2008 builds on the technological and security advances first introduced with Windows Vista and aims to be significantly more modular than its predecessor, Windows Server 2003.


Windows 7

The next major release after Windows Vista is known internally as Windows 7. It was previously known by the code names Blackcomb and Vienna.

GUI of Microsoft Windows

Microsoft modeled the first version of Windows, released in 1985, on the GUI of the Mac OS. Windows 1.0 was a GUI for the MS-DOS operating system, which had been the OS of choice for IBM PC and compatible computers since 1981. Windows 2.0 followed, but it was not until the 1990 launch of Windows 3.0, based on Common User Access, that its popularity truly exploded.

The GUI has seen major and minor redesigns since, notably the addition in Windows 95 of spatial file management capabilities to Windows Explorer, akin to the Macintosh Finder; the contentious web browser integration in Windows 98; the subsequent transition away from spatial file management toward a single-window, task-based interface with Windows XP; and the removal of the browser integration in Windows Vista.

Windows traditionally differed from other GUIs in that it encouraged running applications maximized, as is evident even in this early Windows 1.01 screenshot. Users usually switch between maximized applications using the Alt+Tab keyboard shortcut or by clicking on the taskbar, which lists all open applications, rather than by clicking on a partially visible window, as is more common in some other GUIs.

In 1988, Apple sued Microsoft for copyright infringement of the Lisa and Apple Macintosh GUIs. The court case lasted four years before almost all of Apple's claims were denied on a contractual technicality. Subsequent appeals by Apple were also denied, and Microsoft and Apple apparently entered a final, private settlement of the matter in 1997 as a side note in a broader announcement of investment and cooperation.

GEOS

GEOS was launched in 1986. Originally written for the 8-bit Commodore 64 home computer and, shortly after, the Apple II series, it was later ported to IBM PC systems. It came with several application programs, such as a calendar and a word processor, and a cut-down version served as the basis for America Online's DOS client. Compared to the competing Windows 3.0 GUI, it could run reasonably well on simpler hardware.

Revivals were seen in the HP OmniGo handhelds, the Brother GeoBook line of laptop appliances, and the New Deal Office package for PCs. Related code found its way into the earlier 'Zoomer' PDAs, creating an unclear lineage to Palm, Inc.'s later work. Nokia used GEOS as the base operating system for its Nokia Communicator series before switching to EPOC (Symbian).

The 90s: Mainstream usage of the desktop

The widespread adoption of the PC platform in homes and small businesses popularized computers among people with no formal training. This created a fast-growing market, opening an opportunity for the commercial exploitation of easy-to-use interfaces and making the incremental refinement of existing GUIs for home systems economically viable.

Windows 95 and "a computer in every home"

After Windows 3.11, Microsoft began to develop a new consumer-oriented version of the operating system. Windows 95 was intended to integrate Microsoft's formerly separate MS-DOS and Windows products, and it includes an enhanced version of DOS, often referred to as MS-DOS 7.0. It features significant improvements over its predecessor, Windows 3.1, most visibly the graphical user interface (GUI), whose basic format and structure are still used in later versions such as Windows Vista. In the marketplace, Windows 95 was an unqualified success, and within a year or two of its release it had become the most successful operating system ever produced.

Windows 95 saw the beginning of the browser wars, as the World Wide Web gradually began to receive a great deal of attention in popular culture and the mass media. Microsoft at first did not see potential in the Web, and Windows 95 shipped with Microsoft's own online service, The Microsoft Network, which was dial-up only and was used primarily for its own content rather than for Internet access. As versions of Netscape Navigator and Internet Explorer were released at a rapid pace over the following few years, Microsoft used its desktop dominance to push its browser and shape the ecology of the Web into what was largely a monoculture.

The X Window System

X11 desktop (running the KDE desktop with the KWin window manager).

The standard windowing system in

the Unix world is the X Window System (commonly